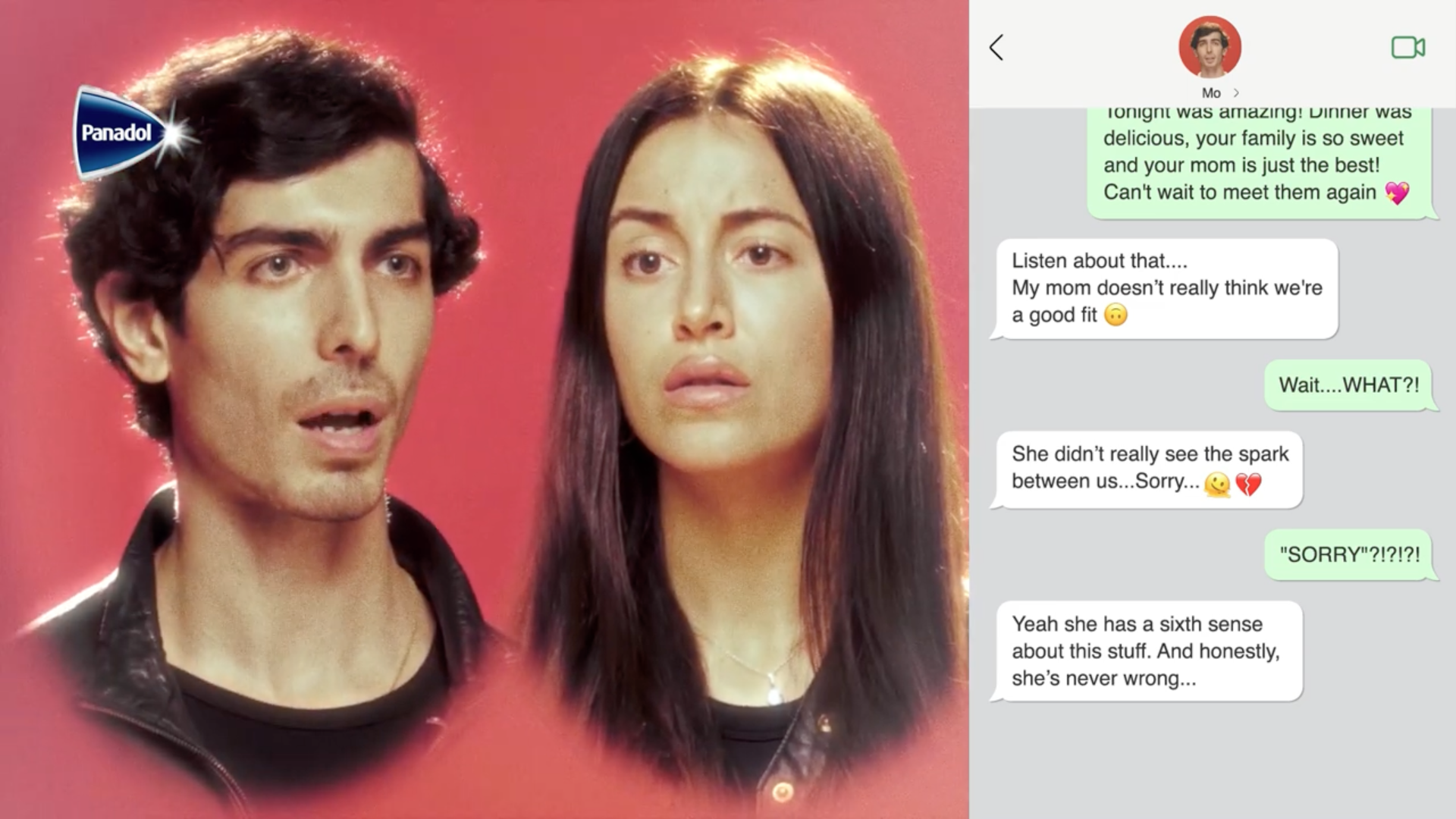

Panadol turns breakup texts into absurd songs for Valentine’s Day

This article was originally published by Campaign Middle East.

Valentine’s Day might be the most romantic day of the year, but not for the heartbroken. This year, Panadol Extra stepped up once again, offering relief not for headaches but for heartbreak by turning brutal breakup texts into hilarious songs.

In partnership with VML Dubai, the brand tapped into the modern breakup trend: ending relationships over text. With humour at its core, the campaign transformed ridiculous breakup messages into kitschy music videos to be shared across social media.

The campaign kicked off with teasers on Instagram and YouTube, inviting people to share their worst breakup texts. Three of the most ridiculous messages were turned into songs, complete with weird, kitschy music videos.

“This campaign proves that good content can resonate, no matter the brand or industry. We tapped into the viral trend of turning digital comments into music, to highlight just how bad people are at handling love and communication,” said Marcelo Zylberberg, ACD at VML Dubai.

Showcasing breakups that make no sense, the videos end with the line “heartache is one ache we are still confused about,” implying that for every other ache, Panadol Extra is the one to reach for.

“They say ‘one day you’ll laugh about this,’ because laughter is the best medicine. We’re just speeding up the process, so people can actually enjoy Valentine’s Day,” added Fernando Miranda, ECD at VML Dubai.

Building emotional connections

The Panadol Valentine’s Day campaign builds on the brand’s broader positioning as a pain relief expert, one that isn’t afraid to acknowledge its limits. Unlike other healthcare brands that take a purely functional approach, Panadol aims to connect emotionally with consumers by tapping into the everyday struggles of modern life, including heartbreak.

This isn’t the brand’s first foray into emotional pain. Last year, Panadol encouraged the heartbroken to “just ignore Valentine’s altogether.” This year, it took things up a notch by addressing toxic breakup culture head-on.

“Last year, we took a bold stance by admitting we can’t fix all kinds of pain, and the response was overwhelming. This year, we’re keeping that energy, tapping into new behaviours and trends,” said Areej Yacoub, Marketing Manager at Haleon.

The brand’s goal is to reinforce Panadol Extra’s role in alleviating pain, while also differentiating it within the highly competitive pain relief market. Rather than focusing on traditional product benefits, the brand uses cultural relevance and humour to engage audiences in an authentic way.

“Panadol Extra is part of a very functional market, but still manages to be part of people’s lives in a very organic and human way. That’s a differential for our brand that we believe should be reflected on how we communicate,” said Ahmed El Gohary, Regional Brand Manager at Haleon.

Credits:

Agency: VML Dubai

Chief Creative Officer, Dubai: Aricio Fortes

Executive Creative Director, Dubai: Fernando Miranda

Creative Group Head: Louis Moghabghab

Associate Creative Director: Fabio Caveira

Associate Creative Director: Marcelo Zylberberg

Senior Art Director: Balu Puthalath

Copywriter: Zuhair Rahman

Motion Graphics: Simon Menpin

Account Director: Lara Medanat

Senior Account Manager: Amal Salha

Senior Producer: Karim Al Chamaa

Client: Haleon

Brand: Panadol Extra

Marketing Manager: Areej Yacoub

Regional Brand Manager: Ahmed El Gohary

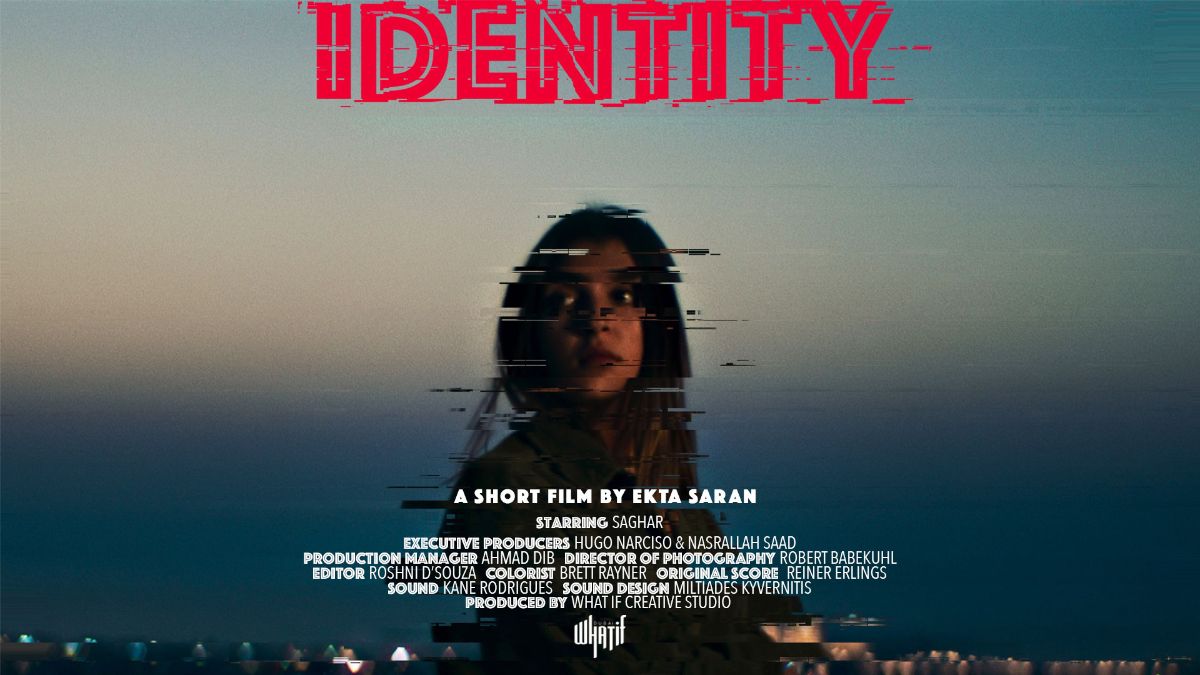

Production House: WHAT IF Creative Studio

Director: Nasrallah Saad

Executive Producer: Hugo Narciso

Producer: Mely Urresti